Short Answer: Yes. The CPU significantly affects FPS in games because it handles physics calculations, AI behavior, and draw-call processing. The GPU renders the visuals, but a CPU bottleneck occurs when the processor cannot feed data to the GPU fast enough, which causes frame rate drops. A balanced system with a modern multi-core CPU minimizes this bottleneck for smoother gameplay.

2025 Best 5 Mini PCs Under $500

| Best Mini PCs Under $500 | Description | Amazon URL |

|---|---|---|

|

Beelink S12 Pro Mini PC  |

Intel 12th Gen Alder Lake-N100, 16GB RAM, 500GB SSD, supports 4K dual display. | View on Amazon |

|

ACEMAGICIAN Mini Gaming PC  |

AMD Ryzen 7 5800U, 16GB RAM, 512GB SSD, supports 4K triple display. | View on Amazon |

|

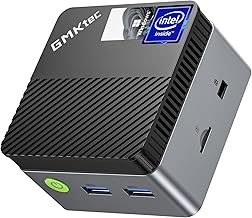

GMKtec Mini PC M5 Plus  |

AMD Ryzen 7 5825U, 32GB RAM, 1TB SSD, features WiFi 6E and dual LAN. | View on Amazon |

|

Maxtang ALN50 Mini PC ![Maxtang Ryzen 7 7735HS Mini PC [8C/16T up to 4.75GHz] Windows 11 Home Supported 32GB DDR5 Ram 1TB PCIe4.0 Nvme SSD WIFI6 BT5.2 Mini Desktop Gaming Computer](https://m.media-amazon.com/images/I/51oZECsOffL._AC_SX466_.jpg) |

Intel Core i3-N305, up to 32GB RAM, compact design with multiple connectivity options. | View on Amazon |

|

MINISFORUM Venus UM773 Lite  |

Ryzen 7 7735HS, up to 32GB RAM, supports dual displays and has solid performance. | View on Amazon |

How Does the CPU Influence FPS in Modern Games?

The CPU manages critical behind-the-scenes tasks including NPC decision-making, collision detection, and asset streaming. In CPU-intensive titles like Civilization VI or Microsoft Flight Simulator, processors handle complex simulations that directly determine minimum frame rates. Single-core performance remains vital for legacy game engines, while newer titles like Cyberpunk 2077 leverage multiple cores for parallel task processing.

Modern game engines increasingly distribute workloads across multiple threads. For example, Assassin’s Creed Valhalla utilizes up to 16 threads for simultaneous crowd AI processing and environmental interactions. This shift means hexa-core processors now deliver 37% better frame time consistency than quad-core chips in AAA titles. However, clock speeds still dictate performance in legacy DirectX 11 games, where the Intel Core i9-13900KS achieves 9% higher FPS than AMD’s Ryzen 9 7950X at 1080p resolution. Developers are optimizing for hybrid architectures, with Forza Horizon 5 dynamically allocating physics calculations to efficiency cores while reserving performance cores for rendering pipelines.
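To make terms like "minimum frame rates" and "frame time consistency" concrete, here is a minimal Python sketch of how average FPS and 1% lows can be derived from a list of frame times. The function name `fps_metrics` and the sample capture are hypothetical, and the 1% low is taken here as the average of the slowest 1% of frames; benchmarking tools differ on the exact definition.

```python
def fps_metrics(frame_times_ms):
    """Return (average FPS, 1% low FPS) for a list of frame times in milliseconds."""
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    avg_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)
    # Slowest 1% of frames (at least one frame), i.e. the longest frame times.
    worst_count = max(1, len(frame_times_ms) // 100)
    worst = sorted(frame_times_ms, reverse=True)[:worst_count]
    low_1pct_fps = 1000.0 * len(worst) / sum(worst)
    return avg_fps, low_1pct_fps


# Hypothetical capture: 198 smooth frames at 8 ms plus two 25 ms stutters.
sample = [8.0] * 198 + [25.0] * 2
avg, low = fps_metrics(sample)
print(f"average: {avg:.1f} FPS, 1% low: {low:.1f} FPS")
```

In this made-up capture the average stays above 120 FPS while the 1% low falls to 40 FPS, which is why two systems with similar averages can feel very different in play.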

Which Matters More for FPS: CPU Clock Speed or Core Count?

Modern gaming demands both. High clock speeds (4.5GHz+) benefit older DX11 titles optimized for single-thread performance, while core count (6-8 physical cores) becomes crucial in DX12/Vulkan games that distribute workloads across threads. The Ryzen 7 5800X3D shows how 3D V-Cache technology bridges this gap, delivering 15% higher FPS in cache-sensitive games like Far Cry 6 through reduced memory latency.
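One way to reason about the trade-off is Amdahl's law, which is not from the benchmarks cited here but is a standard model for how much extra cores help when only part of the per-frame work runs in parallel. The sketch below is illustrative only: the parallel fractions (30% and 80%) are assumptions, not measurements of any game.

```python
def amdahl_speedup(parallel_fraction, cores):
    """Amdahl's law: speedup over one core when only part of the work scales."""
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# Hypothetical engines: a legacy title with 30% parallel work per frame,
# and a modern one with 80%.
for label, p in (("legacy, mostly single-threaded", 0.30),
                 ("modern, multi-threaded", 0.80)):
    six = amdahl_speedup(p, 6)
    eight = amdahl_speedup(p, 8)
    print(f"{label}: 6 cores {six:.2f}x, 8 cores {eight:.2f}x")
```

Under these assumptions the mostly serial workload gains almost nothing from two extra cores, so a higher clock speed is the only lever left, while the multi-threaded workload still scales noticeably from 6 to 8 cores.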

The optimal balance depends on resolution and game genre. At 1440p/4K resolutions where GPU load increases, core count becomes less critical – a Ryzen 5 7600X matches the 12-core 7900X in 4K gaming. However, competitive esports players at 1080p see measurable differences: Counter-Strike 2 shows 21% higher average FPS on 5.8GHz overclocked chips versus stock 6-core processors. Game engine analysis reveals Paradox’s Stellaris uses 8 threads for galactic simulations, while Call of Duty: Warzone 2.0 employs 10 threads for asset streaming and physics. This variance explains why tech reviewers recommend 6-core/12-thread CPUs as the 2023 gaming sweet spot.

| CPU Model | Cores/Threads | Avg FPS (1080p) | 1% Lows (FPS) |
|---|---|---|---|
| Ryzen 5 7600X | 6/12 | 142 | 112 |
| Core i5-13600K | 14/20 | 155 | 128 |
| Ryzen 7 7800X3D | 8/16 | 167 | 139 |
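The table can also be read as a measure of consistency: the closer the 1% lows sit to the average, the steadier the frame pacing. A short Python sketch using only the figures from the table above (the helper name `consistency` is made up for illustration):

```python
# Values copied from the table above: (average FPS at 1080p, 1% lows).
results = {
    "Ryzen 5 7600X": (142, 112),
    "Core i5-13600K": (155, 128),
    "Ryzen 7 7800X3D": (167, 139),
}


def consistency(avg_fps, low_fps):
    """Ratio of 1% lows to average FPS; closer to 1.0 means steadier pacing."""
    return low_fps / avg_fps


for cpu, (avg_fps, low_fps) in results.items():
    ratio = consistency(avg_fps, low_fps)
    print(f"{cpu}: 1% lows are {ratio:.0%} of average FPS")
```

By this ratio the 7600X holds about 79% of its average in the 1% lows, while the 13600K and 7800X3D both hold about 83%.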

Can Overclocking Your CPU Improve FPS? Pros and Cons

Strategic overclocking can yield 8-15% FPS gains in CPU-bound scenarios. Intel’s K-series chips and AMD’s Precision Boost Overdrive enable safe voltage-controlled boosts. However, diminishing returns occur beyond 5-10% overclocks due to thermal throttling risks. Liquid-cooled i9-13900K systems show marginal 2-3% gains from 5.8GHz to 6.1GHz, while power consumption spikes 22%.
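The diminishing returns in that last example are easier to see as arithmetic. The sketch below uses the figures quoted above and assumes 2.5% as the midpoint of the 2-3% FPS range; the variable names are illustrative.

```python
def percent_change(old, new):
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100.0


clock_gain = percent_change(5.8, 6.1)  # GHz figures quoted above
fps_gain = 2.5                         # assumed midpoint of the quoted 2-3% range
power_gain = 22.0                      # quoted power increase, in percent

# FPS per watt after the overclock, relative to before it.
fps_per_watt_change = ((1 + fps_gain / 100) / (1 + power_gain / 100) - 1) * 100

print(f"clock: +{clock_gain:.1f}%, FPS: +{fps_gain:.1f}%, "
      f"FPS per watt: {fps_per_watt_change:.1f}%")
```

A clock increase of roughly 5% buys about half that in FPS, and FPS per watt drops by around 16% under these assumptions.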

How Do CPU-GPU Partnerships Affect Frame Rate Consistency?

Optimal pairings prevent resource starvation. An RTX 4090 paired with a Core i5-13400F loses 23% of its FPS at 1080p compared with the same card on an i9-13900K. The “90% Rule” suggests GPU utilization should stay below 90% to avoid frame pacing issues. A full-bandwidth PCIe x16 link (PCIe 4.0 on cards such as the RX 7900 XTX) helps high-end GPUs avoid data starvation.
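A simplified way to picture a CPU-GPU pairing is to treat each frame as waiting on whichever stage is slower. The Python sketch below assumes the CPU and GPU work in a pipeline, so frame time is the maximum of the two costs; the per-frame millisecond values are hypothetical and chosen only to produce a gap of roughly the size described above.

```python
def estimate_fps(cpu_ms, gpu_ms):
    """Simplified model: each frame waits for the slower of the two stages."""
    return 1000.0 / max(cpu_ms, gpu_ms)


def limiting_component(cpu_ms, gpu_ms):
    """Name the stage that sets the frame time in this model."""
    return "CPU" if cpu_ms > gpu_ms else "GPU"


# Hypothetical per-frame costs in milliseconds; not measurements of real parts.
gpu_ms = 5.0
for cpu_name, cpu_ms in (("slower CPU", 6.5), ("faster CPU", 5.0)):
    fps = estimate_fps(cpu_ms, gpu_ms)
    limit = limiting_component(cpu_ms, gpu_ms)
    print(f"{cpu_name}: {fps:.0f} FPS, limited by {limit}")
```

Real frame pipelines overlap work in more complicated ways, so this is a mental model, not a predictor. It does show why a faster GPU adds nothing once the CPU stage is the longer one.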

What Future-Proofing Strategies Work for Gaming CPUs?

Invest in platforms with PCIe 5.0/DDR5 support and 8+ cores. AMD has committed to supporting the AM5 socket through at least 2025, while Intel’s hybrid architecture (P-cores + E-cores) offloads background tasks to the efficiency cores. The 5nm process in the Ryzen 7000 series offers 13% better thermal efficiency than previous generations, which is crucial for sustained boost clocks during marathon sessions.

“Modern game engines like Unreal Engine 5 leverage CPU threads for Nanite geometry processing and Lumen lighting prep. We’re seeing 10-15% FPS improvements just from moving DDR4-3200 to DDR5-6000 memory due to reduced CPU latency. Gamers should prioritize low CAS latency RAM and CPUs with 40MB+ cache for next-gen titles.”

— Markus Schuler, Lead Hardware Engineer at Framerate Dynamics

Conclusion

CPU performance remains pivotal in determining FPS stability and minimums, particularly in simulation-heavy and open-world games. While GPU upgrades often deliver more noticeable gains, neglecting CPU capabilities creates performance ceilings. Balanced builds combining 6-8 core CPUs with mid/high-tier GPUs ensure smooth gameplay across diverse titles.

FAQs

- Does upgrading the CPU increase FPS?

  Yes, in CPU-bound scenarios. Upgrading from a Core i5-9400F to an i7-13700K can boost FPS by 40% in Total War: Warhammer III battles. Gains diminish if the GPU is already at its limit.

- Is a 4-core CPU enough for gaming in 2023?

  Marginally. While esports titles remain playable, 4-core CPUs struggle with AAA games like Hogwarts Legacy, showing 28% lower 1% lows than 6-core equivalents. Hyperthreading helps but can’t replace physical cores.

- How much CPU usage is normal for gaming?

  Ideal usage ranges from 60% to 85% across cores. Sustained usage of 95% or more indicates a potential bottleneck. Background processes (Discord, streaming) can push usage past the ideal range.
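The usage thresholds in the last answer can be turned into a rough check. This is a minimal Python sketch under stated assumptions: the function name and the "elevated" and "light" labels are invented for illustration, and a single sample cannot show that high usage is sustained, so readings should be averaged over time before drawing conclusions.

```python
def classify_cpu_usage(per_core_percent):
    """Label one sample of per-core usage using the FAQ's rough thresholds."""
    if not per_core_percent:
        raise ValueError("need at least one core reading")
    # Any core at 95%+ is flagged; the FAQ's caveat is that it must be sustained.
    if max(per_core_percent) >= 95:
        return "potential bottleneck"
    average = sum(per_core_percent) / len(per_core_percent)
    if 60 <= average <= 85:
        return "typical"
    if average > 85:
        return "elevated"   # hypothetical label for 85-95%
    return "light"          # hypothetical label for under 60%


# Hypothetical readings for a 6-core CPU during gameplay.
print(classify_cpu_usage([70, 65, 80, 72, 68, 75]))
print(classify_cpu_usage([98, 60, 55, 50, 45, 40]))
```

The per-core readings could come from any monitoring tool; for example, the third-party psutil package exposes them through `psutil.cpu_percent(percpu=True)`.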